Background

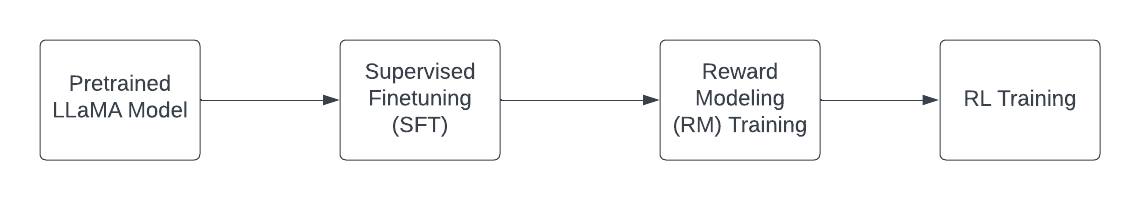

“Reinforcement Learning Human Feedback” (RLHF) has been identified as the key innovation on improving LLM alignment. It’s identified to be the key proprietary technique held by OpenAI and Anthropic to achieve superior performance on universal tasks for their chatbots. Some papers that have disclosed their RLHF training details are Learning to summarize, Harmless & Helpfulness and the iconic InstructGPT. These works have really sparked the OSS community to repro RLHF, mostly notable ones are StackLLaMA, in which the RLHF has been trained to improve Question & Response on stackoverflow questions. Typically RLHF process includes Supervised finetuning (SFT) and Reward Modeling Training (RM). For example, below is the training pipeline used in StackLLaMA.

Challenges & Alternatives

It is worth noting that there are still a lot of ongoing debates regarding the feasibility of RLHF, see HuggingFace blogs. A few alternative approaches have been proposed. Basically there are 2 classes of alternatives, one is to avoid RL training and still make use of RM for sampling/ranking, such as RRHF and RAFT; another class is to avoid fitting a RM, such as Direct Policy Optimization. Why is the community trying to bypass RM and RL? Because there are major challenges when applying these 2 techniques:

- Reward modeling relying on 1) human preference labels; and 2) using a standalone LLM to finetune a classification model – 1) can heavily suffer from human bias, whereas 2) can lead to overfitting and Out-of-Domain (OOD) generalization problems.

- RL training is very unstable, subject to large variances during optimization. In fact we have rarely seen complex RL algorithms (any more complex than bandit algorithms) actually landed in production, due to its unstable nature when training & deploying online.

Despite the above challenges, RLHF has already demonstrated usefulness in chatbot de-toxicity. De-toxic is a relatively easier problem – after all it wouldn’t be too hard to build toxic/hate speech reward models as there exists plenty of such datasets; many companies like Meta already have very sophisticated hate speech datasets. To me the generalization problem for harmlessness should be easier considering we human beings all share pretty common standards regarding harmful speech(hate speech is more subtle). However, when the “human preference signal” is not as easy to capture as harmlessness, like groundness, dialogue quality (see LaMDA), especially grounded on technical knowledges like the StackExchange dataset, it’s highly subject to bias and extremely difficult to collect ground truth labels. The general belief is that with massive and rich human preference datasets, the bias and generalization problem can be eventually solved to a good level. Companies such as OpenAI and Anthropic have a much more complete pipeline and well-setup engineering team to collect de-biased (gender, age, demographics etc.) human preference signals, the OSS community does not share the luxury. Nonetheless we have started to see open-source efforts to build such universal datasets, for example OpenAssistent has labeled more than 100k dialogue data with “quality” and “creativity” labels, this dataset has already been used in a lot more recent research works.

Relationship with Instruction Fine-tuning

The motivation for RLHF is to address the shortcomings of Instruction-Fine Tuning (IFT). IFT is training LM to maximize the likelihood of the given data examples, however this is often not what we really want. This goes back to the original argument of “LLM alignment”, see my earlier post, the goal is to make LLM more “aligned” instead of exactly maximizing the IFT dataset’s ground truth – reward model (RM), can serve as a proxy of the actual “human preference”, hence maximizing RM is more desirable for “alignment” (in this OpenAI post it has explained how RM is used as “proxy objective” of the true human preference objective); another benefit for RL is that it helps Out-of-Domain (OOD) generalization, since on-policy training can probe the model to produce unseen outputs in the original dataset hence train the policy to be robust to OOD scenarios.

One observation people have is that IFT/SFT is highly likely to hallucinate when trained on domain data. Speculating from GPT’s significant improvement on hallucinations in the recent 2 years, people have high hopes that RLHF can be the cure for hallucination. A plausible argument could be that during RL, the policy is trained on out-of-domain data hence more robust to hallucinating prompts which are often triggered as OOD examples unseen in the training set. Note that a counter argument would be if RM is overfitting it would create more hallucination as policy is optimized towards the wrong end. Through my own experience, RLHF alone is definitely not a cure for hallucination – it’s a much more complex, sometimes fundamental problem. From a practical standpoint, we often need to solve it case by case, for example, existing open-sourced LLMs are known to hallucinate on links, this is b/c the tokenizer/training data is not designed to understand URLs, causing the model to have its own wrong interpretation of the meaning of URLs.

Implementation wise, compared with RLHF, IFT/SFT is a much, much simpler and intuitive process. Once you have the instruction dataset, often shared on the internet (see ShareGPT, Alpaca), you’re good to go. IFT/SFT has already led to a plethora of downstream LLM applications. The IFT stresses more importance on curating instruction dataset, as I covered more in a previous blog post.

There are mythical facts about IFT as well. One analogy people often use is to see IFT as behavior cloning so as to prove that RL is strictly better than IFT. I don’t really agree with this analogy, BC (behavior cloning) does not assume you are initializing from a sledge hammer LLM! IFT is quite a new ML method. First, IFT converges really fast, it’s been proved to converge with less than 1000 examples (see LIMA) with a few hundred iterations; Secondly, though slight regression was identified, IFT never introduces significant overfitting issues as observed in literatures.Third, IFT is not particular sensitive to dataset distributions. There are already numerous public datasets available and surprisingly, all of them work fairly well even though they are collected from different labelers, different domains etc.

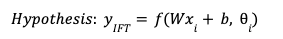

In my own understanding, IFT is “adjusting” LLM interface to more human readable form. For example, when you greet your friend: “whatsup”, you also implicit means: “I am here to politely greet you and so plz respond with proper language and do not express negative feelings, keep your response short b/c I have no time to listen to your shenanigans”, in other words, IFT convey these implicit “instructions” to the LLM. In fact, let’s be more crazy here, imagine IFT is a linear approximator:

here, f is the function of the i-th layer of the original network, 𝜃i is the weight of the i-th layer, x_i is the input of the i-th layer, (Wx+b) is the linear approximator we hypothesize. Assuming this is our definition of “alignment”, then the whole RLHF “alignment” won’t make sense anymore.

RLHF in the Wild

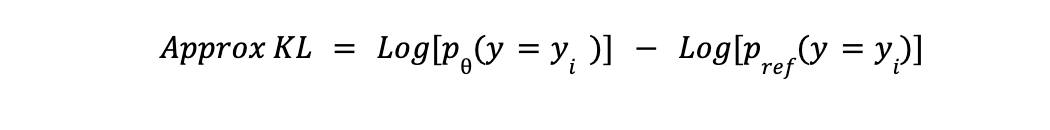

In typical RL terminology, RLHF is defined to be the “on-policy” scheme where the policy is updated based on real time agent rollout. From my own experience, the “on-policy” formulation is too ideal, in practice RLHF training is so finely controlled KL divergence between the init policy (the SFT model) and current policy, that w/o doing so the model quickly goes nuts and diverge to nonsense territory, hence RLHF training barely benefits from “exploration”. To me, this process is even more mythical than IFT (still practiced as supervised learning).

The Devil – Reward Model

As summarized in section 2, ideally, a real human can sit in the background providing critiques/guidance to RL policy until it converges to his/her satisfactory form, however, we’ll know it’s impossible, so RLHF has to rely on a poorly generalized RM to provide imperfect signals, sometimes when we’re lucky (of course based on finely controlled KL, again), you can eventually learn a strong policy that can outperform IFT to a large margin, during our practice we have found impressive powers from RL training, for example:

- In StackExchange dataset, when trained on many thumbed-up responses from the internet, the model has learned to, almost always provide very detailed, bullet point answers to questions with a very appropriate tone of speaking.

- In HH, it has been trained to be robust on all sorts of trickery to solicit toxic languages from the model.

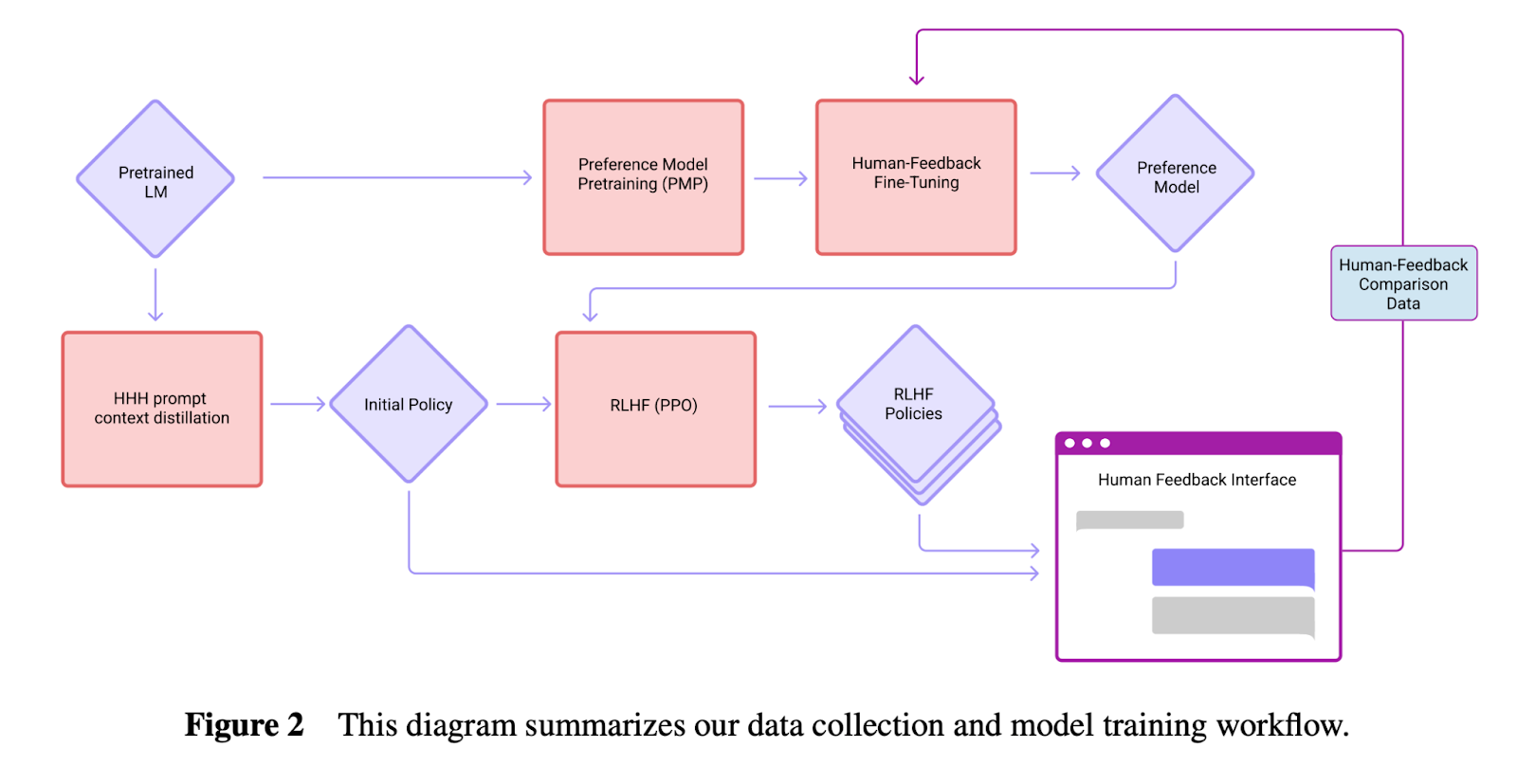

We have also seen Anthropic apply online RLHF in production as shown below. As Claude is rolled out to a large number of audiences, it will help them collect huge amounts of human preference data that can keep improving the reward model’s generalization, which in turn will benefit RL training. More importantly, as the trained RL policy is rolled out to real world users, it can keep receiving feedback on its weakness and errors, update RM, hence keep improving the policy in a continuous fashion.

The Upperbound of RLHF

So what is the real upperbound of RLHF? Nobody really knows, here we discuss a few “capability” axes where we really have high hopes for RLHF, especially on reasoning capabilities.

Can RLHF help Improving Common Sense?

LLM has already achieved remarkable performance on public commonsense benchmarks, yet it still has much headroom to improve. Even the strongest GPT4 can make the silliest mistake that is ridiculous for humans, see ChatGPT leaking windows license key. It is worth noting that GPT4 has significantly improved on this axis, compared with GPT4, other open-sourced LLMs are very sensitive to such trickery attacks.

I strongly believe commonsense is something baked in human DNA, it’s not something that can be generalized from a small amount of data samples, nor do I believe it can be resolved by LLM “alignment”. Training data for “commonsense” is going to be the biggest bottleneck, to put into perspective, public datasets like PIQA has 16k examples and only cover a fraction of “commonsense”. The situation is about to change – as LLM usage has grown exponentially this year, so will the collected human feedback data, we have good reason to believe eventually we can collect enough data and build a human level preference/reward model – the “world” preference model (note this model needs to be updated constantly as human’s concepts of ethics and integrity change over time, see Figure 3). Similar to self-driving cars, “edge cases” will be endless, luckily, collecting human preference data is much more cost-effective than self driving car’s road test, which require rolling out hundreds/thousands of vehicles and collect millions of data points each day (teaser: it will be very interesting to see how LLM equipped with human-level commonsense can help solve edge cases in self-driving).

There are risks that lie in the definition of “common-sense” – ethics and integrity are always controversial topics. Similarly to Twitter/FB decides what post people should see, it’d be controversial for OpenAI etc. to govern what a “preference model” should be, as LLMs become ubiquitous, community standard and even legalization will be a required step for such human preference modeling, similar to what they do currently in AI Integrity, but LLM will bring the controversy to another level, a universal chatbot governs “human preference” that is beyond integrity and ethics, it behaves just like a human and people will believe it being a human. It will profoundly change our lives if everyday we were interacting with chatbots powered by LLMs.

Can RLHF improve Reasoning?

Reasoning is too broad of a concept and often used as a weasel word. Here I try to semi-rigorously break down reasoning into a more narrow sense: multi-step planning, math, and coding, following the pattern I’ve seen in a few recent papers, e.g. augmented LLM, to quote the author’s definition:

In the context of ALMs, reasoning is decomposing a potentially complex task into simpler subtasks the LM can solve more easily by itself or using tools. There exist various ways to decompose into subtasks, such as recursion or iteration. In that sense, reasoning is akin to planning as defined for example in LeCun (2022). In this survey, reasoning will very often refer to the various strategies to improve reasoning skills in LMs, such as step-by-step reasoning using few-shot examples. It is not yet fully understood whether the LM is really reasoning, or simply producing a larger context that increases the likelihood of correctly predicting the missing tokens. We refer to Huang and Chang (2022) for a discussion on this topic: although reasoning may currently be an abuse of language given the current state of the art, the term is already in use within the community. A more pragmatic definition of reasoning in the context in ALMs is giving more computation steps to the model before yielding the answer to a prompt.

Multi-step Planning

It’d be unreasonable to believe LLM can excel at this, think about planning – even human struggles at it. Planning is very advanced problem solving skills, it involves breaking down the problem into smaller tasks, being able to “predict” what would happen if you take this or that action, and predict what your opponent/environment will react to your action. One of the most amazing capabilities that unlock planning is “decision making”, which is also one of the most exciting AGI moments to celebrate. Being able to make decisions, implies self-awareness: do I remember this? Do I have confidence in this? Which one is more ethical or less evil according to my own “thoughts”?

As of 2022, SOTA LLMs have demonstrated decent zero-shot planning capabilities, see LLMs are zero-shot planners. Still, successful prompting strategy is the key to make it work, notable works are Chain-of-Thoughts (CoT), Tree-of-Thoughts (ToT), ReAct. We will see plenty of LLM applications in the next 2 years embracing such prompting concepts; moreover, this is innovating the way we write software: instead of carefully control every single step, now you babysit a untamed monster, probabilistic in nature, relying on the monster to think on its own and execute. To me if played right, this brings human creativity and productivity to another level:

- Think about how many anti-human programming languages we still have to deal with today: Verilog, C, bash scripts, how much time is wasted on nitty-gritty’s of software cuz it has 0 thinking unless told exactly what to do.

- Think about all those nonsense template and API abstractions

- Think about WebGPT, without LLM doing the planning & execution, such an application will be impossible to implement with traditional software tools.

A complete prompt trace of ReAct is shown below, here Search[keyword] indicating tool use, when the model decodes such a phrase, an external API call is issued to fetch “observations”. “Lookup” means a secondary internal retrieval API that processes documents fetched from external APIs (Google/Bing). Such internal Tools/APIs are not as well-known for the LLMs, so more prompts are likely needed to teach LLM how to use these “new tools”.

ReAct

Question What is the elevation range for the area that the eastern sector of the

Colorado orogeny extends into?

Thought 1 I need to search Colorado orogeny, find the area that the eastern sector

of the Colorado orogeny extends into, then find the elevation range of the

area.

Action 1 Search[Colorado orogeny]

Observation 1 The Colorado orogeny was an episode of mountain building (an orogeny) in

Colorado and surrounding areas.

Thought 2 It does not mention the eastern sector. So I need to look up eastern

sector.

Action 2 Lookup[eastern sector]

Observation 2 (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.

Thought 3 The eastern sector of Colorado orogeny extends into the High Plains. So I

need to search High Plains and find its elevation range.

Action 3 Search[High Plains]

Observation 3 High Plains refers to one of two distinct land regions

Thought 4 I need to instead search High Plains (United States).

Action 4 Search[High Plains (United States)]

Observation 4 The High Plains are a subregion of the Great Plains. From east to west, the

High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130

m).[3]

Thought 5 High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer

is 1,800 to 7,000 ft.

Action 5 Finish[1,800 to 7,000 ft]

… (more few-shot demonstrations)

2 Observations coming out of these In-Context-Learning (ICL) methods:

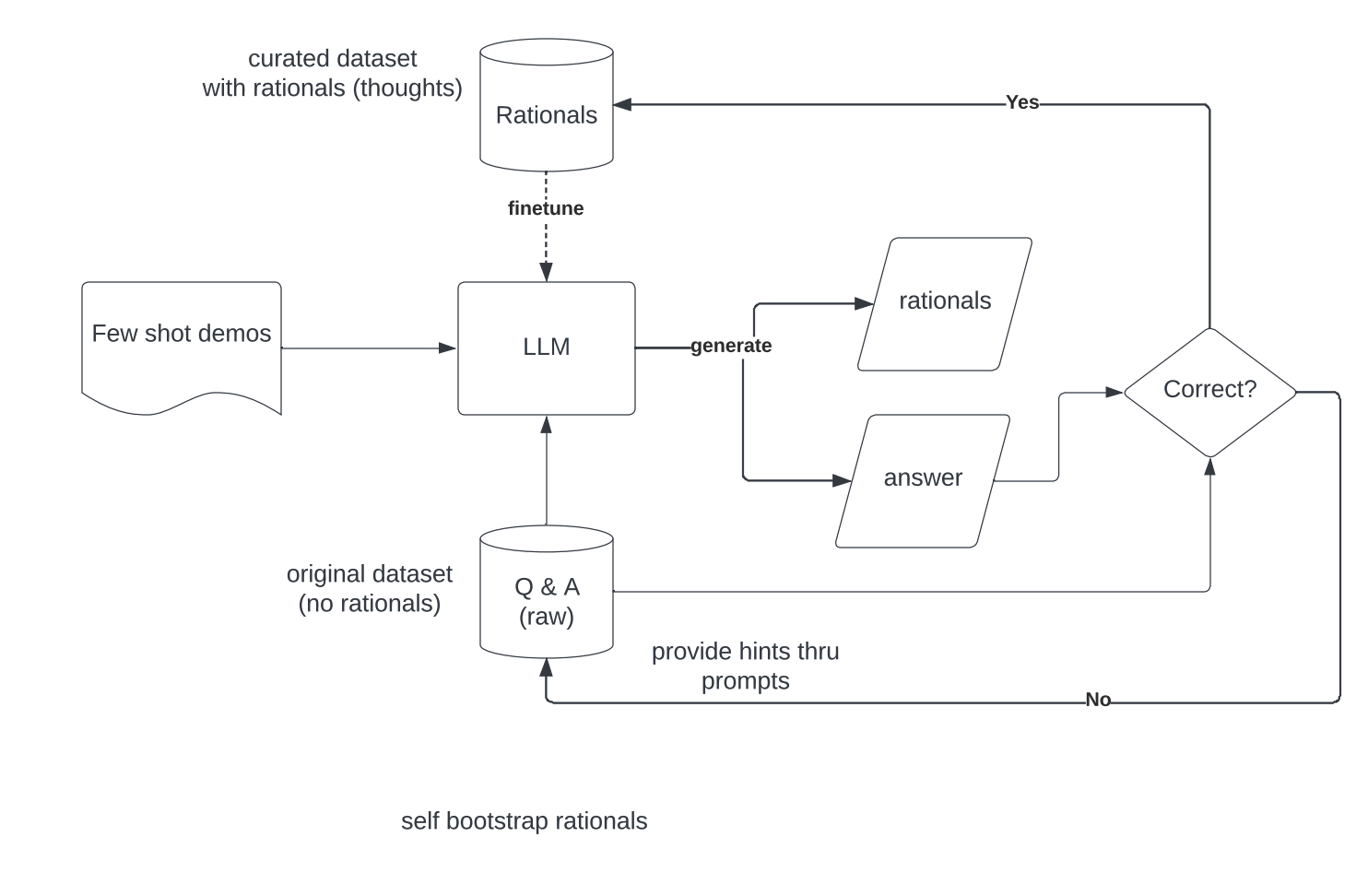

- How do we craft these thought prompts? For a new case/task, do we need to tailor a specific prompt for each task? The answer is yes, for each task you prolly need to go thru multiple trial-and-err – automated or not, until you can have LLM to reliably generate thoughts/steps to arrive at the final destination, see Star using self-bootstrapping to curate the “thoughts” database.

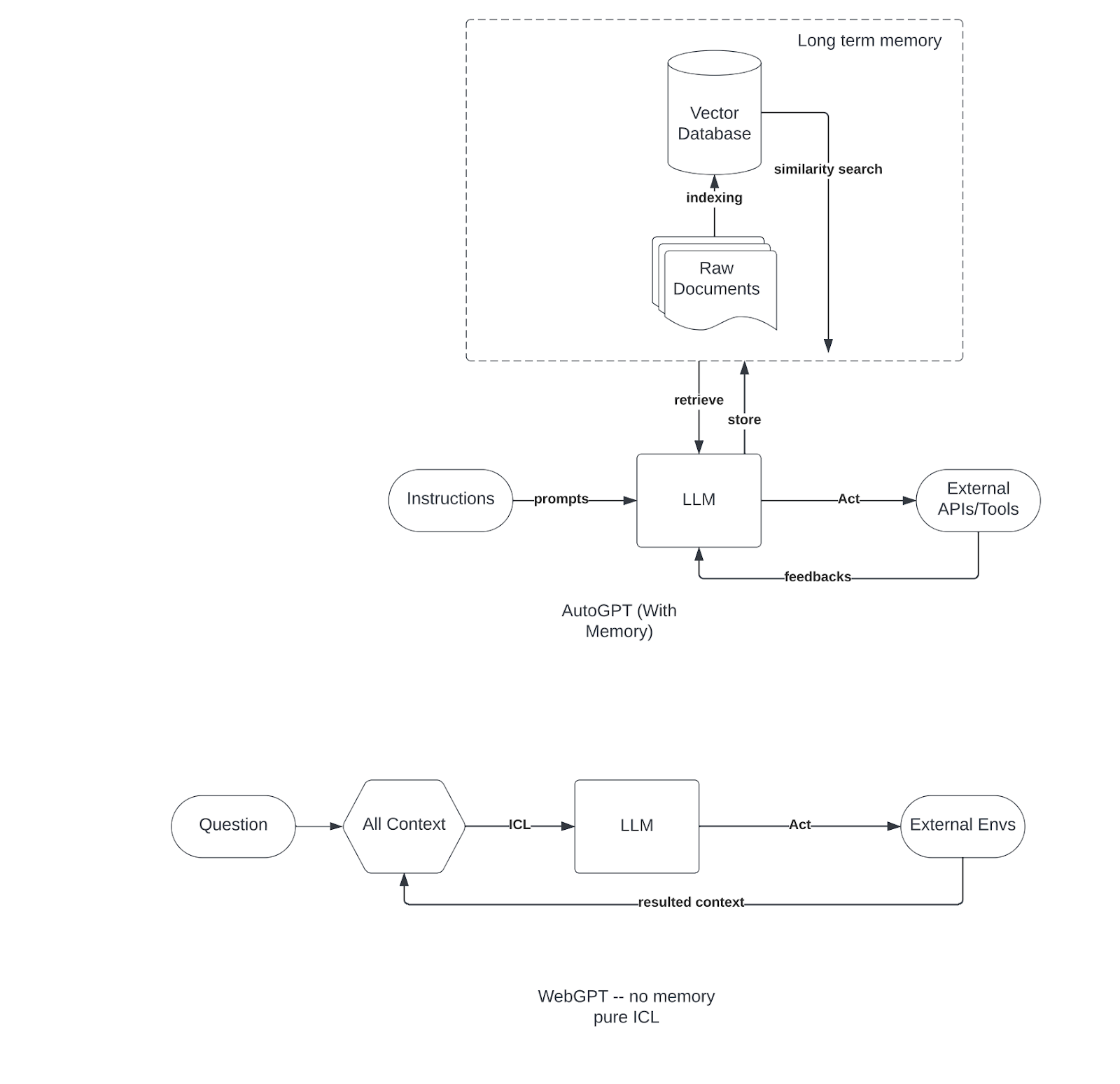

- Managing LLM’s state. This can be done with either pure In-Context-Learning (ICL), which is appending everything action-results to model’s context window (then it follows how to expand LLM’s context window which is another topic); or save/load from external memory services, such as “vector store”. Examples of the former is WebGPT, while the latter is AutoGPT, which stores long-term memories in external services like PineCone.

Another direction is use of fine-tuning dataset to improve planning, Minerva (https://arxiv.org/pdf/2203.14465.pdf) has demonstrated good fine-tuning dataset can improve this step-by-step thought capability.

Prompting is not fun, especially putting up those few-shot examples. Can LLM keep improving its 0-shot reasoning as the scaling-law still holds (for more discussion of scaling-law, see my post on LLM-pretraining)? As of mid-2023, GT4 really proves this: not only GPT4 can solve all the failed Blocksworld examples by GPT3 (see https://arxiv.org/pdf/2206.10498.pdf) zero-shot near perfect (tried a few myself), but it can also recurrently probe itself to generate reasoning paths and completely solve a task E2E without human intervention (see GPT4 interpreter) – many of the common GPT4 weakness, like it really struggles with word puzzles like wordladder (to accurately to plan ahead of time: look-ahead ability, and simulate recursive/backtrack), calculation errs like division (simulate arithmetics) — have to admit these are human weakness as well — are solved with code interpreter. This is just like what humans did, human struggles to calculate especially when numbers are big and steps are long, and that’s how computers and imperative programming are invented to do these for us.

To augment SoTA LLMs, good or worse, a hybrid system may emerge in the future, in this blog by openAI, the author depicts an orchestration of agents that handle memory, planning and execution each (see picture above). To my speculation, ChatGPT is already using such a system in production, one proofpoint is that we’re often seeing OpenAI hotfix bugs instantly, it’s hard to believe they can retrain the main model in such short amount of time, there could be “hotfix models” that are much easier to retrain/fine-tune — however this is pure speculation, alternatively they might already find a way to make their instruction-fine tuning 100% controllable and super efficient that they can fine-tune their main model instantly (a few hundred steps?) for each hotfix.

Twist of Thoughts: How Can RL Help?

Would it be too crazy to believe the multi-step planning of LLMs can reach human level in the next 2 years? Will Reinforcement Learning help unlock this capability?

We’ve all seen how DeepMind’s AlphaGo/AlphaStar has achieved unbelievable planning skills far beyond humans. From disclosed details we know AlphaGo relies on Monte-Carlo-Search-Tree (MCTS) as data structure to model player+opponents’ states, and RL as the learner to optimize player’s moves. The question is whether success in sequential decision making can generalize to all sorts of planning scenarios. We know AlphaGo provides no value to anything else than the game of Go, b/c it has no ability to perceive other tasks or any world knowledge about anything else other than Go. It is reasonable to believe LLM can serve as the “perception” layer for AlphaXXX using its common-sense etc. world knowledge, once the planning agent is successfully “engaged” – meaning the concept of the task is successfully transfer to a sequential decision making process (MDP) where the planning agent excels at, it can unleash the superpower of AlphaXXX to beat human far away on universal planning tasks. We know nowadays robots are used almost 100% in the factory b/c they are programmed to exactly receive this form of input sensors and output exactly those types of actions. If we want them to work in our homes as a service bot, how were you able to update them to daily chores? How will they react to random new stuff popping up each day? Now imagine you have a robot that can literally understand anything, you can just let-go and let him work out on his own – this will truly unlock service bot to massive adoption. This is such an amazing future picture, something I believe DeepMind has a huge vision for (and prolly already crazy working on it).

Math

Math is such a big area, here we scope down to only symbolic reasoning and arithmetics.

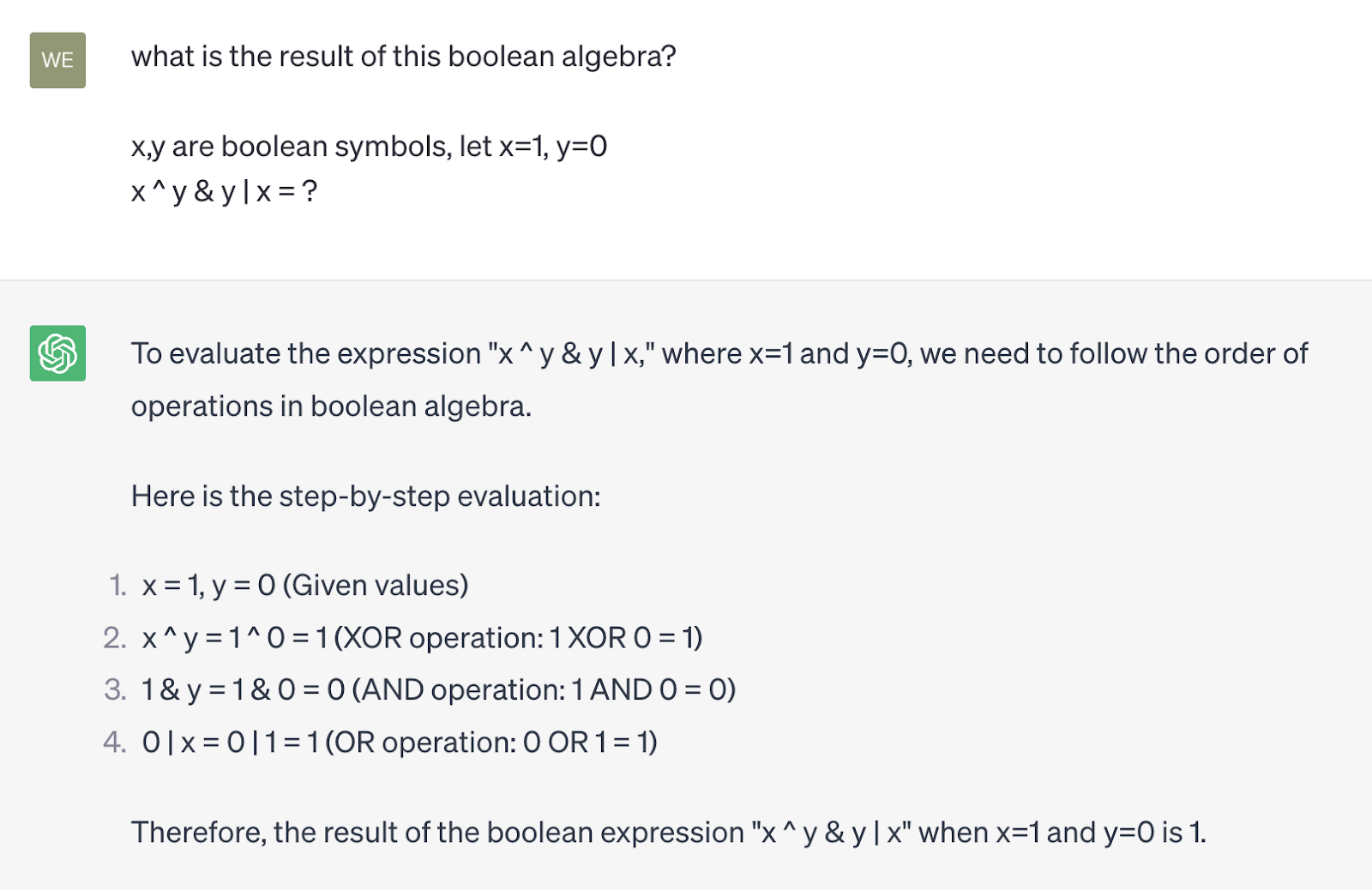

- Symbolic reasoning: there are many of such public benchmarks. Encouraging LLMs to think step by step (CoT) are proven to significantly improve performance on some of the benchmarks (see Chain-of-Thought paper, but the 2 tasks are very simple). I also tried to evaluate a few boolean expressions on GPT4 and it’s 100% accurate: it provides calculation at each step, demonstrating it’s been trained with CoT like process to product answers for such reasoning tasks:

- Arithmetics: in the narrow sense, this is just a calculator. With good fine-tuning strategy, not necessarily RL (or not even a powerful LLM) you can have LLM to behave exactly like a calculator: breaking down the numbers into digits, and performing carry bits etc, see my previous post. One observation is that GPT4 etc is prone to make arithmetics mistakes when the numbers are large, this is also discussed in the previous multi-step planning section.

People also have high hopes on LLMs solving college level math that are hard for humans in the first place. One noticeable work in this direction is Minerva – Minerva’s methodology is highly correlated with “coding” that we’re going to talk about next. Minerva has already shown good math reasoning can be achieved with curated math datasets. It’d be interesting to see if RL can further improve LLM’s math derivation ability with reward signals. In my experience, GPT4 can solve unary quadratic and cubic equations very well – using existing techniques it learned on the Web. Although the result calculation is often wrong, which is now mitigated by using external calculators like GPT Code Interpreter. An AGI Evaluation of GPT4 has similar findings, quote:

The conversation reflects profound understanding of the undergraduate-level mathematical concepts discussed, as well as a significant extent of creativity. Although GPT-4 makes a mistake in one instance (writing 2n−1 rather than 2n/2 ), the subsequent conversation demonstrates that this error does not reflect a lack of understanding Rather, GPT-4 seems to have made an error in a manner 41 similar to a human typo, as it later provides the correct generalization of the formula

For harder and harder math problems, learning a policy from reward signals seems far from enough. There needs to be some creativity when solving a math problem: LLM is trained from a massive amount of math corpus, so it’s pretty good when solving problems with existing formulas, but when seeing a fresh new problem, it flops. We humans struggle at math exactly for this reason – when we already can map a problem to available formulas, we can solve it with ease; but when no existing formula is available we suffer from creative thinking.

Coding

What is the ability to code? There are many capability axes associated to coding:

- First, you need to understand programming language itself, syntax is always easiest, think about advanced topics like multithreading, object-oriented, build/packaging/deployment;

- Second, you need to understand data structure and algorithms — a hard topic for any human being as well;

- Now you have everything in your toolbox, you’re ready to code; you need to understand your high level goal: Is it supported by the current programming language? What are the dependencies/libraries? All those need to be answered before you actually start coding.

- Now you really start to code, the situation really varies: sometimes you need to breakdown a single requirement into multiple scenarios hence implemented with sub-modules (divide and conquer), this also includes writing test for each module; sometimes you’re doing an adapter, essentially converting from one interface to another, so you just follow the spec and wire the arguments from one interface to another; sometimes you’re creating the “core” implementation — often referred to as “engine” — an algorithmic heavy component to do heavilyfitted processing of informations: the core algorithm could be the physics engine in CG, could be indexing/sorting for efficient retrieval of relevant information. One interesting fact is that these core “engines” are way more than what you can see from the surface – they bear highly distilled understanding of the actual use cases, they are extremely performant which connects to the underlying hardware.

SOTA LLM’s coding benchmarks, e.g. HumanEval is more like coding challenge tasks: given a description, which contains all the necessary context you need, write the algorithmic implementation, there are also standard test cases to verify. This is mainly testing the “data structure and algorithm” capability axis mentioned above. GPT4 already has a very impressive 80% pass@1 rate on HumanEval and 60% pass@1 on LeetCode (see AGI evaluation of GPT4 ), which well exceed most of the entry level software engineers.

The industry is very excited about LLM coding, a few use cases I observed are:

- text2sql, this is a much needed feature for most of the data analysis. For simple query generation it can be solved with ease, for complex queries, it’ll be hard to explain in plain language all those join statements in SQL to LLM. Ideally you want LLM to act as data analyst independently, more than just converting text to sql, this has already been preliminary demonstrated in GPT Code interpreter – not only it’s been able to follow the instruction, but it’s creating its own “analysis” of the data using creative thinking.

- Web frontend development. Frontend/UI is classic example of “implementation gap”, it doesn’t require high skilled data structure/algo, the requirement is usually very clear and unambiguous; it’s really like 1-step implementation, almost like copying from a large template with many many knobs to tune (color, css, jquery all those UX trash), humans are always limited by their short-term memory – this is why a proficient web frontend developer can be 10x more productive than entry level. I wouldn’t be surprised if existing LLMs can generate UX gadget javascript code perfectly well. As you dig more into E2E web development, all the “coding capability axes” will appear, such as interacting with backend etc, which becomes more challenging for LLMs.

As of 2023 LLMs are still used in its capability on data structure & algorithms. This marks the success of Github GoPilot and Replit, these companion bots can already improve developer’s productivity by 10x in many cases. To go one step further, beyond data structure and algorithms, to have LLM actually act as a software engineer, still a few I can see to key breakthrough is required:

- Being able to learn new use cases, understand new requirements, and map them to software design. This is what makes a good software engineer, truly graduate student level intelligence. It’s also about creativity – think about developing a real time messaging app, do my customers need delivery receipts? If so, do they want “sent”+”delivered”? or just “sent”? What are the performance trade-offs? Luckily, the majority of software development is duplicate work: we typically have a thing called “template”, or even more often just copy-pasting existing code with small modifications, for example, writing a website, or training a ML model. All the requirements → implementations are very similar. This is how LLM really jumps in, assuming it doesn’t have graduate level intelligence, but it can follow a template and copy paste from exemplar implementations. Of course the risk goes with this is that without know-how, it might do stupid things. So a human “supervisor” or QA (quality assurance) engineer is still relevant.

- Being able to plan large software projects, break it down to smaller components, how they orchestrate. Not essentially different from 1, but here we would assume the person is purely “implementing” when the software spec is already written (the wrongest assumption I could ever think of, as constant syncs are needed between the “designer” and “the implementer”, and often they are the same person). This is more advanced than multi-step planning, b/c it involves deep understanding of the programming language and sometimes the underlying hardware. A good software engineer is always, always judged by the glory or shitty piece of code he’s written. Such “coding” difficulty varies a lot, sometimes it’s super human, think about the godly person written git, or the Unreal Engine. Sometimes the process is very well defined and can follow existing templates – here LLM comes, bingo!.

- Being able to debug, in software engineering,“fixers” are always the hidden legend; it’s a very special role, it requires some very particular intelligence there. The more complex the system is, the harder the job for the fixer. It’d be most interesting to see how LLM can perform as a fixer: it involves “learning” the code. GPT4 has already demonstrated amazing skills debugging snippets of codes. The question is whether LLMs can quickly learn from a large amount of context information (in real production scenarios). Supervised Fine-tuning should play a major role here, if the LLM can be finetuned on accurate debugging info, it can address the majority of the production errors.

Whirlwind of Thoughts – AGI Coder

Eventually with the coding ability unlocked, we can go further crazy and imagine AI creating its own tool when facing compatibility issues, breaking all the version control shenanigans; and even more break the “abstraction nightmare”: if everything is so transparent and inline for AI, why we still need those nonsense design patterns and software abstractions? Why do we need all the structured input and convert them back-n-forth? (maybe one day the question being why do we need software engineers?).

In the next 2 years, it’ll be very exciting to see LLM+RL help discover strong data structures and algorithms that beat history-long designs similar to what has been achieved in AlphaFold. In fact I’m mostly interested to learn why DeepMind didn’t tackle coding challenges before games and protein discovery challenges. Could it be the case that coding ability can only be “unlocked” as we scale up the transformers model? If this is true, we would imagine LLM to perform universal software engineering tasks, otherwise, we would imagine a combination of SFT+prompting to help tackle different coding problems.

Loose Ends

As many existing prompt-based planning works have demonstrated, learning from trial-and-err is an important capability for LLM. Similar to humans, LLM should be able to learn from these trial-and-errors for domain specific tasks instead of repeating the errors over and over again. RL can be an effective way to learn from these samples. Note that RL does not necessarily mean sample efficiency – it still requires a large amount of trial-and-err before it finds ways to increase rewards, as opposed to “learning from a few demonstrations just as humans do” that people would imagine the “alignment” process to be. To this end, Instruction-finetuning seems more sample efficient than RL, plus policy gradient methods will suffer a lot more on training loss stability. The “alignment” topic stays mythical and RLHF is just a particular technique that only cracks a tip of the iceberg.

In the ideal state, we don’t need to pedantically tell AI what to do just like we craft incredibly verbose prompts to it today. It kind of works on its own, learning from trial-and-errs, guided by its decision making driven by self-awareness. A hybrid multi-agent system is depicted in Lilian Weng’s Blog which seems achievable with SOTA LLMs. It’d be interesting to see the development of project AutoGPT to track how this line of work can progress up to.

When facing hallucination, the current solution poked from related works indicating massive data collection and creating a fantastic “reward model” (Human Preference Model) can eventually break the deal. This is less to do with LLM “alignment” but rather addressing the vulnerabilities of the LLM under attacks.

When facing complex reasoning subjects, we can see a number of LLMs developed for highly advanced scientific topics using its improved reasoning capabilities. We can also moderately predict LLMs acting as ops engineers/oncalls for us in the near future, debugging systems that would be annoying for humans to fix. RL is not particularly relevant in this area, we look forward to the continued upscaling of LLMs (to trillions parameters landmarks as of 2023 end) hence possibly unlocking more complex reasoning capabilities, such as advanced coding skills, planning complex tasks.

To rebut the previous point, one also argues that complex reasoning does not need sledge hammer scaling laws, RL can be the magic wand just like it did in AlphaGo/AlphaStar. So, what is DeepMind up to….